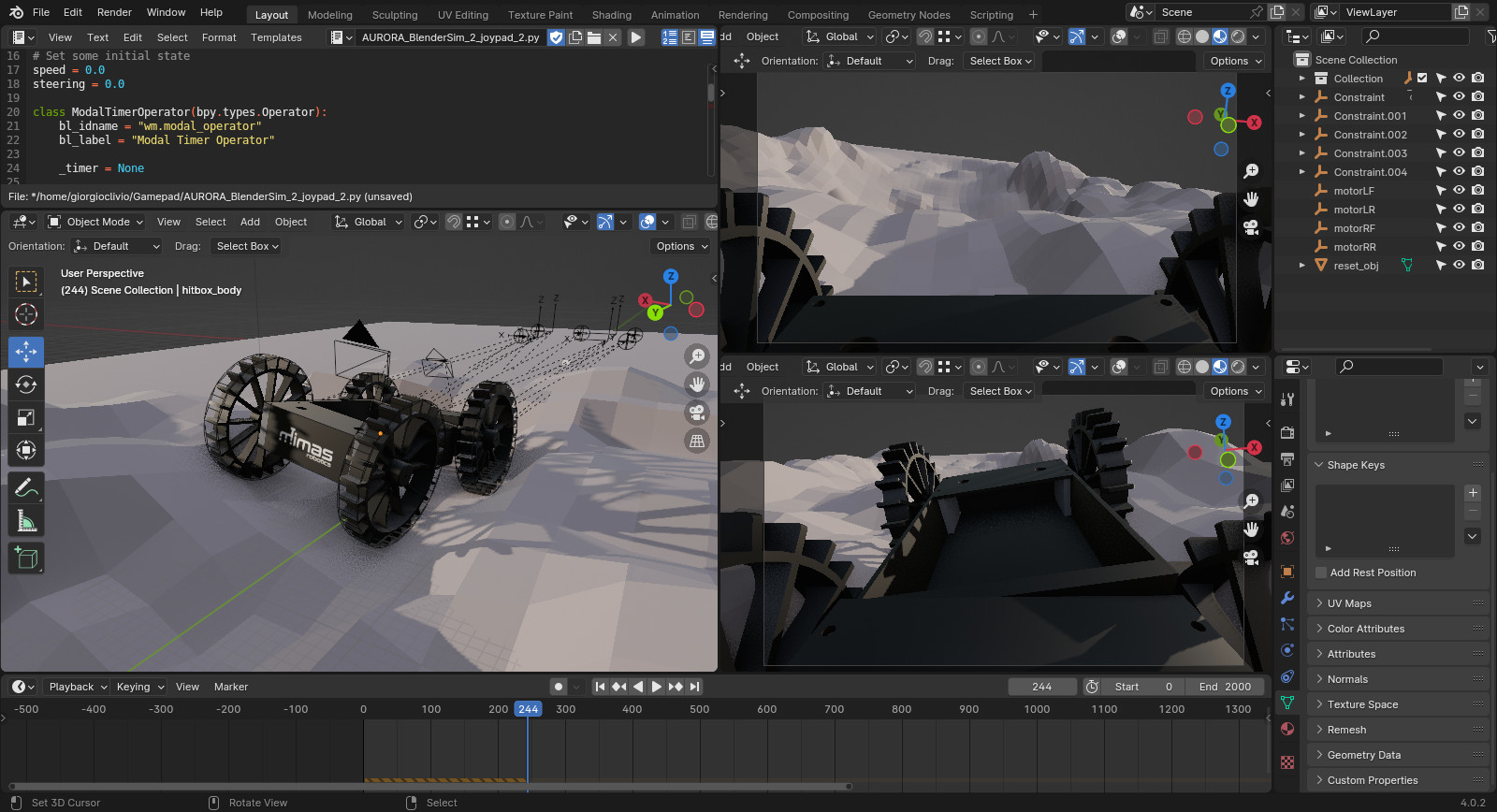

Real-time simulations using Blender and Python

Watch: Aurora Rover: Real-time simulation using Blender and Python

In robotics, real-time simulations are invaluable for testing and refining algorithms, control strategies, and overall system behaviour before deploying solutions to physical robots. I have almost 10 years of experience with Blender, so I decided to use it instead of Gazebo or similar tools. Blender's real-time simulation capabilities and powerful render engine let me interactively control and visualize a robot's movements in a simulated environment, so I can rapidly iterate on designs, experiment with various scenarios, and fine-tune control parameters in real time. Using a single piece of software to design the robot, produce renderings and animations, and run physically accurate simulations not only accelerates the development cycle but also eases the transition from simulation to physical implementation.
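As a rough illustration of the kind of update loop a real-time simulation runs, the sketch below advances a rover pose at a fixed time step from a velocity command. It is not the code used for the Aurora Rover: the `Pose` class, the `step` and `simulate` functions, and the simple unicycle-style kinematic model (used here in place of Blender's rigid body physics) are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical planar rover pose: position in metres, heading in radians."""
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0


def step(pose, linear_speed, angular_speed, dt):
    """Advance the pose by one time step using explicit Euler integration."""
    # Move along the current heading, then update the heading itself.
    x = pose.x + linear_speed * math.cos(pose.heading) * dt
    y = pose.y + linear_speed * math.sin(pose.heading) * dt
    heading = pose.heading + angular_speed * dt
    # Wrap the heading into the range [-pi, pi].
    heading = math.atan2(math.sin(heading), math.cos(heading))
    return Pose(x, y, heading)


def simulate(pose, commands, dt=0.02):
    """Apply a sequence of (linear_speed, angular_speed) commands in order."""
    for linear_speed, angular_speed in commands:
        pose = step(pose, linear_speed, angular_speed, dt)
    return pose
```

Inside Blender, a function like `step` could be called periodically, for example from a callback registered with `bpy.app.timers.register`, with the resulting pose copied onto an object's location and rotation. A fixed time step keeps the behaviour repeatable regardless of how fast the viewport redraws.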

Transitioning to 6061 Aluminium

Watch: 1st attempt CNC machining Continuity's parts

Continuity is currently built from 3D-printed PLA plastic, a good material for prototyping because parts are easy to modify and adjust. However, as the mechanical demands grow, the limitations of PLA become apparent. Achieving structural strength requires high infill or a higher external wall count, which is difficult on smaller, intricate parts, where PLA's fragility under load becomes a concern. With the assistance of Edinburgh Napier University's Mechanical Workshop technicians, all structural components are moving from PLA to robust 6061 Aluminium. This transition requires a complete redesign: I used Blender to 3D-model all the pieces but, unlike 3D printers, CNC machines don't like STL files. To bridge the gap between Blender's polygon-based designs and CNC machines' preference for STEP files, I opted for FreeCAD, in keeping with my open-source approach. The photo shows one of the two top links for Continuity's legs, machined on a CNC machine as a test. The result is good, but not yet as good as the finished version will be.

Joypad integration

During the last few weeks of testing, I integrated a joypad into Continuity's control methods. This simplifies testing on the robot, improves precision, and makes things a bit more fun when a quick demo is needed (which has happened several times in the past few weeks). I chose to connect a PS3 joypad using the "Gamepad" GitHub repository, which simplifies the process and provides multiple joypad configurations to suit different testing needs. In an initial trial, I focused on controlling the Remote Sensing Mast (RSM), a pivotal element of the rover with two degrees of freedom: azimuth rotation and elevation. The RSM serves critical functions such as capturing photos and panoramic images and supporting autonomous navigation by aligning with the direction of movement. Subsequent tests focused on leg extension and other general movements, all executed smoothly through joypad control. While these tests may appear pointless given that Continuity is ultimately meant to be autonomous, being able to control the robot in a user-friendly way is actually extremely important for a side project I'm working on. I'm trying to develop a simulation framework using Blender, the same open-source software I used for 3D-modelling Continuity. Blender can run rigid body simulations, and interfacing them with a Python script might allow me to use it as a real-time simulation tool. More updates will follow on this topic.
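To make the RSM control idea concrete, here is a minimal sketch of how two joystick axes could be turned into azimuth and elevation targets. It does not use the "Gamepad" repository's API and is not Continuity's actual code: the function names, the dead zone, the maximum rate, and the angle limits are all hypothetical values chosen for illustration. It assumes the stick axes have already been read as values between -1 and 1.

```python
from dataclasses import dataclass

# All constants below are assumptions for illustration, not Continuity's real values.
DEADZONE = 0.1                      # ignore small stick noise around the centre
MAX_RATE_DEG_S = 45.0               # mast speed at full stick deflection
AZIMUTH_LIMITS = (-180.0, 180.0)    # degrees
ELEVATION_LIMITS = (-30.0, 90.0)    # degrees


@dataclass
class MastState:
    """Hypothetical RSM target angles in degrees."""
    azimuth: float = 0.0
    elevation: float = 0.0


def apply_deadzone(value, deadzone=DEADZONE):
    """Return 0 inside the dead zone, otherwise rescale so full deflection is still 1."""
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def clamp(value, low, high):
    """Keep value within [low, high]."""
    return max(low, min(high, value))


def update_mast(state, stick_x, stick_y, dt):
    """Integrate stick deflection into new mast angles over a time step dt (seconds)."""
    azimuth = state.azimuth + apply_deadzone(stick_x) * MAX_RATE_DEG_S * dt
    elevation = state.elevation + apply_deadzone(stick_y) * MAX_RATE_DEG_S * dt
    return MastState(
        clamp(azimuth, *AZIMUTH_LIMITS),
        clamp(elevation, *ELEVATION_LIMITS),
    )
```

Treating the stick as a rate command rather than an absolute angle means the mast holds its position when the stick is released, which tends to suit slow, precise pointing.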