Watch: Aurora Rover: Real-time simulation using Blender and Python

In robotics, real-time simulations are invaluable for testing and refining algorithms, control strategies, and overall system behaviour before deploying solutions to physical robots. I have almost 10 years of experience with Blender, so I decided to use it instead of Gazebo or similar tools. Blender, with its real-time simulation capabilities and powerful render engine, lets me interactively control and visualize a robot's movements in a simulated environment, so I can rapidly iterate on designs, experiment with various scenarios, and fine-tune control parameters in real time. Using a single piece of software to design the robot, produce renderings and animations, and run physically accurate simulations not only accelerates the development cycle but also eases the transition from simulation to physical implementation.

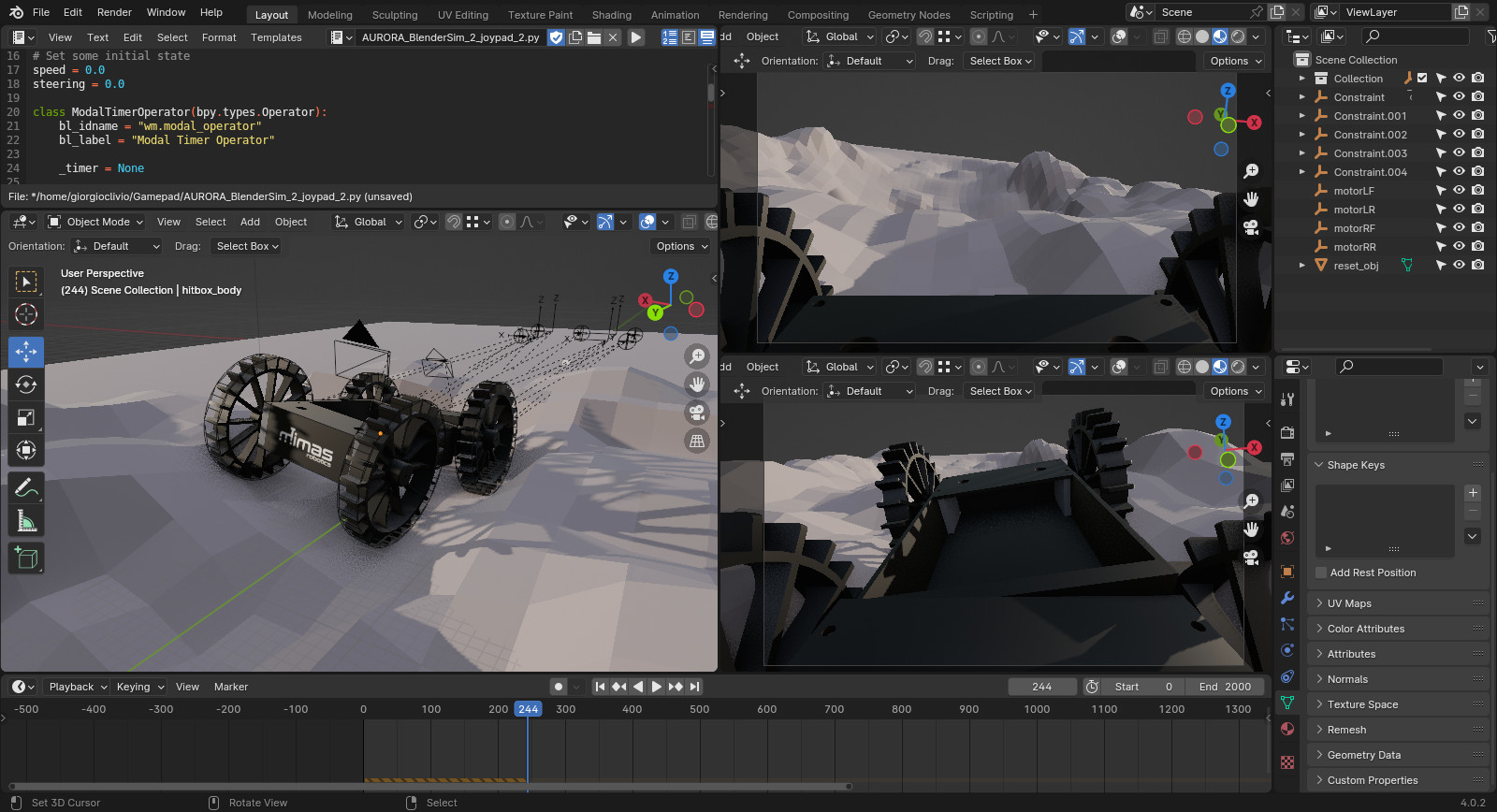

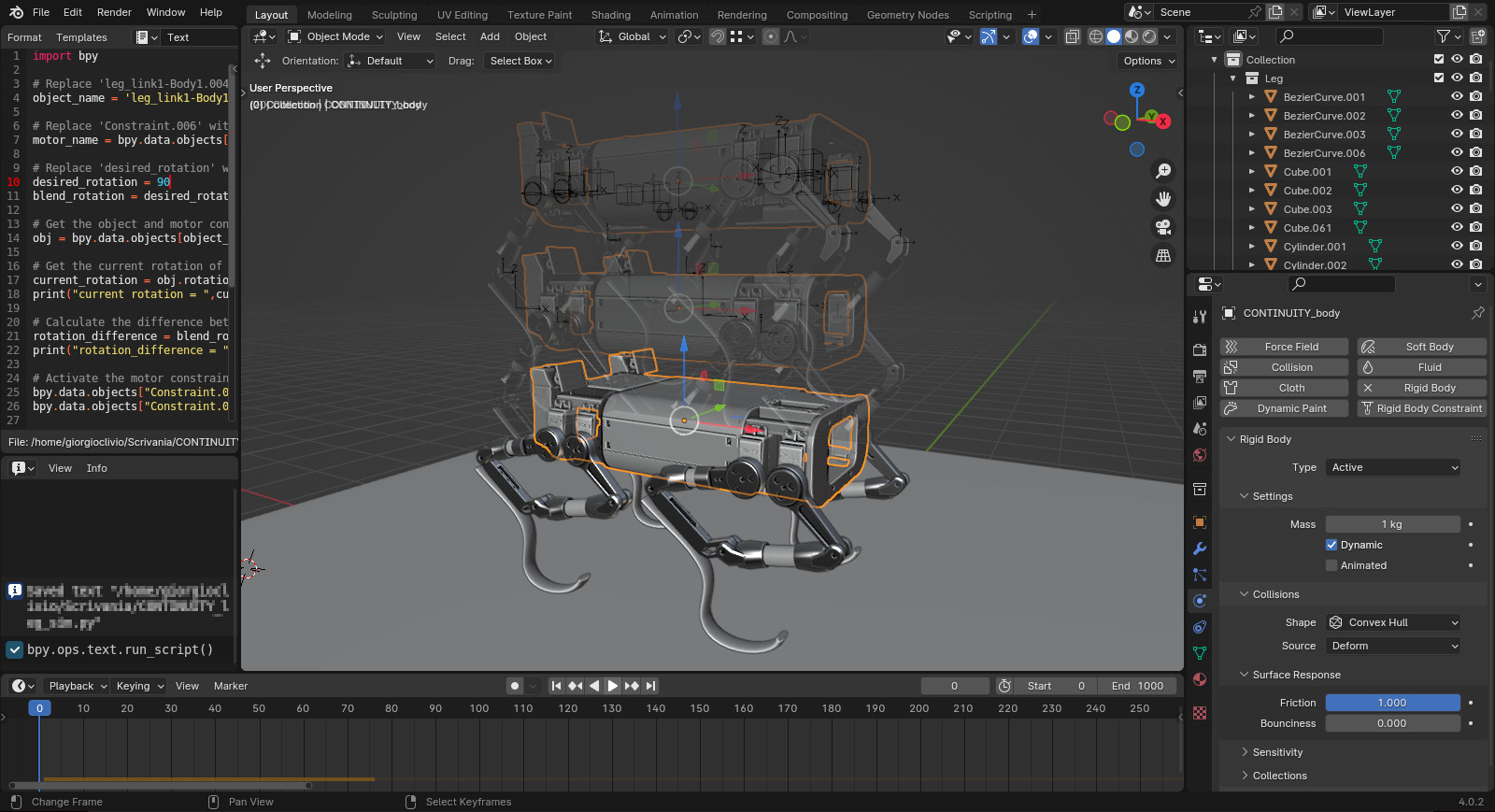

Before simulating Continuity, in which joints have complex mechanical interactions with each other, I worked on AURORA (Ad-hoc Unit for Robotics Operations, Research and Analysis), a small rover I designed and use for testing. I started by setting all elements of the robot as active Rigid Bodies and the floor as a passive one. The wheels are controlled by a Python script running directly inside Blender. The script enables real-time interaction with the simulation through a modal operator that continuously updates based on gamepad input ("Gamepad" GitHub Repository).

The script defines a modal operator named ModalTimerOperator, which responds to timer events and joystick input. The gamepad's axes, denoting speed and steering, are utilized to control four motors (motorLF, motorRF, motorLR, and motorRR) of a simulated robot. The operator continuously updates the motor velocities based on the gamepad input, allowing for real-time control. The modal method of the operator handles the core functionality, continuously monitoring events.
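To make the structure more concrete, here is a minimal sketch of how such an operator could look. It is not the actual script: the skid-steer mixing in `mix_axes`, the `MAX_WHEEL_SPEED` value, and the `read_axes` placeholder (which stands in for the gamepad library) are all assumptions of mine, and I am also assuming that each motor object carries a Rigid Body Constraint of type Motor with its angular motor enabled. The operator skeleton follows Blender's stock "Operator Modal Timer" template.

```python
try:
    import bpy  # only importable inside Blender
except ImportError:
    bpy = None

MAX_WHEEL_SPEED = 10.0  # rad/s, assumed value for illustration


def clamp(value, low=-1.0, high=1.0):
    """Limit value to the range [low, high]."""
    return max(low, min(high, value))


def mix_axes(speed, steering, max_speed=MAX_WHEEL_SPEED):
    """Map two gamepad axes in [-1, 1] to four wheel velocities (skid steering)."""
    left = clamp(speed + steering) * max_speed
    right = clamp(speed - steering) * max_speed
    return {"motorLF": left, "motorLR": left, "motorRF": right, "motorRR": right}


def read_axes():
    """Hypothetical placeholder: return (speed, steering) read from the gamepad."""
    return 0.0, 0.0


if bpy is not None:

    class ModalTimerOperator(bpy.types.Operator):
        """Poll the gamepad on a timer and drive the wheel motors."""
        bl_idname = "wm.modal_timer_operator"
        bl_label = "Modal Timer Operator"

        _timer = None

        def modal(self, context, event):
            if event.type == 'ESC':
                self.cancel(context)
                return {'CANCELLED'}
            if event.type == 'TIMER':
                speed, steering = read_axes()
                for name, velocity in mix_axes(speed, steering).items():
                    # Assumption: the object has a Motor rigid body constraint.
                    motor = context.scene.objects[name].rigid_body_constraint
                    motor.motor_ang_target_velocity = velocity
            return {'PASS_THROUGH'}

        def execute(self, context):
            wm = context.window_manager
            self._timer = wm.event_timer_add(1 / 30, window=context.window)
            wm.modal_handler_add(self)
            return {'RUNNING_MODAL'}

        def cancel(self, context):
            context.window_manager.event_timer_remove(self._timer)
```

Inside Blender, the class would be registered with `bpy.utils.register_class(ModalTimerOperator)` and started with `bpy.ops.wm.modal_timer_operator()` while the animation is playing, so that the rigid body simulation keeps stepping. Depending on how the constraint axes are oriented, the wheels on one side may need the opposite sign.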

The modal operator runs continuously, responding to joystick input and dynamically updating the robot's motion. The script can also calculate the distance to the nearest obstacle from the robot, using a sensor placed in front of it (SensorFRONT). The calculated distance is then printed to the console.
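One possible way to get that distance is a ray cast from the sensor into the scene. The sketch below is only an illustration under my own assumptions: that `SensorFRONT` looks along its local +Y axis, that a `max_range` of 10 m is enough, and that the ray does not start inside the rover's own geometry. The helper names `hit_distance` and `front_distance` are hypothetical.

```python
import math

try:
    import bpy  # only importable inside Blender
    from mathutils import Vector
except ImportError:
    bpy = None


def hit_distance(origin, hit_point):
    """Euclidean distance between the sensor origin and a ray hit point."""
    return math.dist(tuple(origin), tuple(hit_point))


def front_distance(sensor_name="SensorFRONT", max_range=10.0):
    """Return the distance to the first surface in front of the sensor, or None."""
    if bpy is None:
        raise RuntimeError("front_distance() must run inside Blender")
    scene = bpy.context.scene
    depsgraph = bpy.context.evaluated_depsgraph_get()
    sensor = scene.objects[sensor_name]
    origin = sensor.matrix_world.translation
    # Assumption: the sensor's local +Y axis is its viewing direction.
    direction = (sensor.matrix_world.to_3x3() @ Vector((0.0, 1.0, 0.0))).normalized()
    hit, location, _normal, _index, _obj, _matrix = scene.ray_cast(
        depsgraph, origin, direction, distance=max_range
    )
    return hit_distance(origin, location) if hit else None
```

Calling `print(front_distance())` from the operator's timer branch would print the value to the console on every tick, with `None` meaning nothing was hit within range.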

This method is still quite rudimentary, but it runs in real time with no issues, and thanks to its modularity I was able to transfer it to Continuity quite easily. There's still a huge amount of work to do on it, but it will be released as open source on my GitHub soon.